Introduction

The most pressing IT issues affecting businesses today pertain to data management: there is a lot of data, and it is growing at an accelerating pace in various forms. The main driver of this growth is the gathering of extremely large unstructured data sets to gain customer insight and secure a competitive business advantage. In addition, a multitude of new digital sources connected to the “Internet of Things” (IoT) is expected to generate unprecedented levels of data. All of this data needs to be protected to ensure business continuity and to meet legal and regulatory requirements. Data protection has traditionally been delivered through data replication, both on and off site, to guard against malicious attacks and unplanned events such as hardware failures and disasters. However, this approach further exacerbates the issue of data growth, because every replica adds another full copy of the data to be stored. The pressing questions in data protection are therefore the following:

- How to curtail data inflation?
- How to access collected data in a reliable, timely and easy manner?

The volume, velocity and variety of the data that must be collected and kept consistently available add complexity to most existing data protection platforms. There is thus a pressing need for a simple, consistent and scalable data protection platform that allows any customer to collect all essential data and use the collective knowledge of data and metadata to address business needs.

The right solution curtails data inflation without compromising on required features, and leverages current IT architecture trends to deliver a platform that is consistent, simple, flexible and cost-effective.

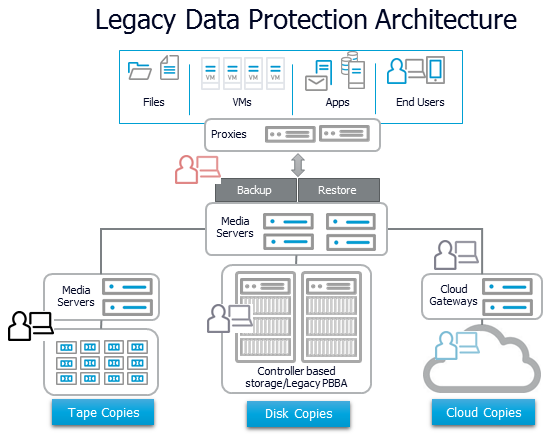

Traditional scale-up platforms consist of storage controllers that provide the features and processing for a set of back-end disk enclosures, which in turn supply the storage capacity. Irrespective of the make and model of the storage controller, this architecture has built-in limits that cap growth in storage capacity and performance. Once those limits are reached, a forklift upgrade is required, which hurts ROI and demands careful planning to avoid disruption.

Because data growth is accelerating, a scale-up data protection platform in many cases becomes obsolete before it can be fully depreciated, adding to the cost of doing business.

The Platform

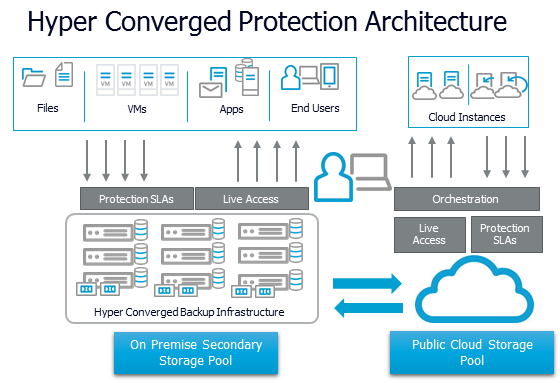

IT customers are in the midst of a technological shift towards simplicity, driven by the need to be agile and cost-effective. Simplicity, the key consideration in this transformation, is hampered by the siloed nature of legacy scale-up architectures. Operating scale-up platforms requires purchasing proprietary products and building expertise in them, which leads to exorbitant costs for the customer. To address these issues, customers are moving to infrastructure built on modular and cost-effective scale-out architecture. The scale-out approach brings simplicity and flexible scaling while preserving the required resiliency and performance. The lack of a scalable, open, simple and efficient IT platform designed for data protection of applications adds to business cost. Extending data center transformation to data protection and recovery is a key efficiency step that contributes to better data management. Commvault’s HyperScale data protection platform addresses this need while also providing other data management features. It sets the stage for a services-oriented approach to data protection, whether the goal is to operate on-premises or migrate to the Cloud.

HyperScale Data Protection

Hyperconvergence is the software-defined integration of dispersed and disparate hardware resources into a contiguous, shared pool. Typically, compute and storage elements from widely available rack servers are integrated to form a shared pool that can scale out as necessary. Performance and capacity of the platform can grow easily through the addition of modular blocks (sets of nodes) or bricks (disks), with less capital expenditure. This scale-out framework is integral to Commvault’s data protection platform, where compute and storage resources from a set of rack servers are used to deliver MediaAgent functionality.

The platform leverages the features, architectural resiliency, flexibility and scalability of Commvault data protection software, including distributed indexing and deduplication. Erasure coding is an additional feature that provides resiliency against hardware failure with minimal overhead. In-place, native access to data managed by Commvault, whether on-premises or in the Cloud, eliminates unnecessary data movement and speeds up access because data does not need to be restored to primary storage first.

Commvault’s HyperScale data protection platform allows compute and storage resources to be increased concurrently, giving almost linear scaling of performance and capacity to meet the data protection needs of any enterprise. The data protection software forms a contiguous storage pool that is purely software-defined, with no dependency on the underlying hardware. The solution can manage applications that span unstructured and structured data, whether on bare metal, virtualized or in the cloud, and running on various infrastructure. The end result is a data protection platform that can scale as required while meeting the Service Level Agreement (SLA) of any business.