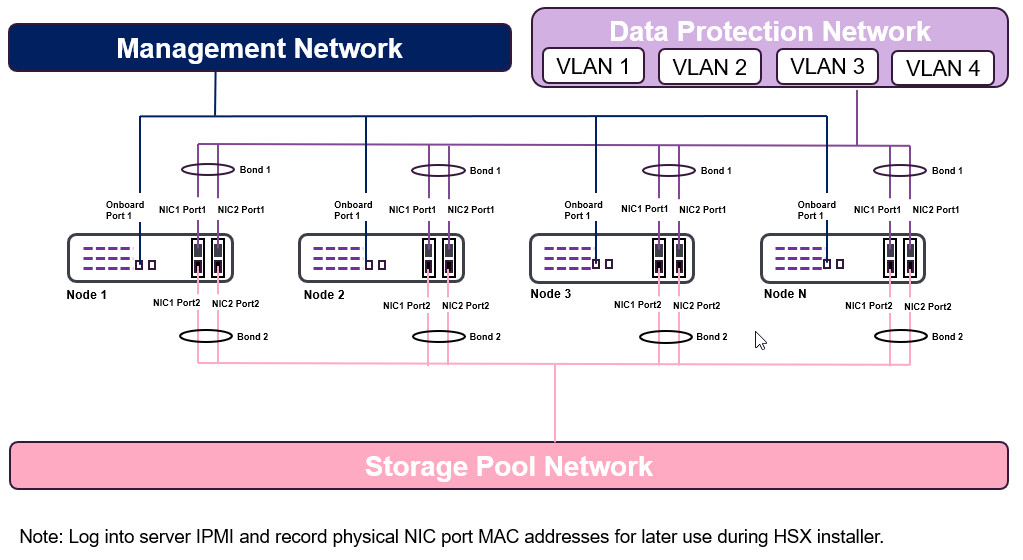

This topology combines the bonded VLAN topology with the 1 GbE management interface.

- This allows multiple tagged VLANs to connect to the appliance through the bonded data protection interface. It also allows connections to multiple non-routable networks, which avoids sending backup traffic through a router or firewall.
- The management interface routes management traffic over one of the built-in 1 GbE copper interfaces. The management interface requires additional cabling.

In this topology, each node requires the following connections:

- 2 x 10 GbE bonded ports for data protection. These ports connect to multiple tagged VLANs to transfer data to and from the clients being protected, and to connect to the CommServe server. This includes connections to:
  - 2 or more VLAN IDs
  - 2 or more IP addresses, based on the number of VLANs

  One of the VLANs must remain routable to connect to the CommServe server.
- 2 x 10 GbE bonded ports for the storage pool, which is a dedicated private network used for communication and replication between the HyperScale nodes.

  VLAN interfaces cannot be configured on the storage pool interface.
- 1 x 1 GbE port for the management network, which is used exclusively for management-related communication, such as communicating with the CommServe server and accessing the DNS server.

  Management ports cannot be configured with bonding.

Note

This is an extreme scenario where multiple VLANs connect directly to the HyperScale X Appliance nodes over a single physical connection. It is typically used to connect directly to different networks to avoid traversing routers or firewalls that can become a bottleneck, or to connect to isolated networks. It can be combined with the bonded configurations to provide redundancy using either Active-Backup bonding or the Link Aggregation Control Protocol (LACP). It can also be used with a multi-switch link aggregation protocol such as Virtual Port Channel (VPC), MLAG, or MC-LAG to provide switch-level redundancy. A management network can be added for additional flexibility.

- Each pair of ports is bonded on the node, so it is treated as one logical connection. If a node encounters a cable, SFP, or network card failure, the node remains operational without any user intervention. Each pair can optionally be connected to 2 switches to provide switch-level redundancy.
- Active-Backup and Link Aggregation Control Protocol (LACP) are the supported bonding modes.

  LACP requires the switch(es) to support it as well. When using LACP, each pair of ports should be configured as an active port-channel, and not configured to negotiate the aggregation protocol.

Network Requirements

In this topology, the number of IP addresses required depends on the number of VLANs. For example, if you have 3 VLANs (10, 20, and 30), you will require 3 data protection IP addresses per node, one per VLAN, in addition to the storage pool and management addresses, as follows:

- 2 x 10 GbE bonded ports connected to the VLAN IP addresses for each of the VLANs used for data protection.

  Out of the available VLANs, one network should be routable and the other network(s) must be non-routable. The routable network is used for CommServe server registration, in addition to data protection operations. All the other VLANs are used exclusively for data protection operations.
- 2 x 10 GbE bonded ports for the storage pool, which is a dedicated private network used for communication and replication between the HyperScale nodes. This requires a corresponding IP address for the storage pool network.
- 1 x 1 GbE port for the management network, which is connected to a routable network with DNS access. This requires a corresponding IP address for the management network.
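The address count described above can be sketched in a few lines of Python. This is an illustration only: the function name `ip_addresses_per_node` is hypothetical, and it assumes one data protection address per VLAN plus one address each for the storage pool and management networks. It does not count the IPMI port.

```python
def ip_addresses_per_node(vlan_ids):
    """Count the addresses one node needs in this topology.

    Assumption: one data protection address per distinct VLAN, plus one
    address each for the storage pool and management networks.
    """
    data_protection = len(set(vlan_ids))  # one address per distinct VLAN
    storage_pool = 1
    management = 1
    return {
        "data_protection": data_protection,
        "storage_pool": storage_pool,
        "management": management,
        "total": data_protection + storage_pool + management,
    }


counts = ip_addresses_per_node([10, 20, 30])
print(counts)
```

With VLANs 10, 20, and 30, the sketch reports 3 data protection addresses per node, and 5 addresses in total once the storage pool and management networks are included.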

Note

Data Protection, storage pool and management networks MUST be on separate subnets.
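Before filling in the worksheet, it may help to confirm that the planned networks really are on separate subnets. The following is a minimal sketch using Python's standard `ipaddress` module. The helper name `find_overlaps` is hypothetical, and the storage pool and management subnets are invented for illustration; only the three VLAN subnets follow the sample addresses used elsewhere in this document.

```python
import ipaddress
from itertools import combinations


def find_overlaps(networks):
    """Return pairs of network names whose subnets overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(parsed.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical plan: the storage pool and management subnets are made up
# for this example. Replace every entry with your own subnets.
subnet_plan = {
    "vlan10": "172.16.10.0/24",
    "vlan20": "172.16.20.0/24",
    "vlan30": "172.16.30.0/24",
    "storage_pool": "192.168.50.0/24",
    "management": "10.0.0.0/24",
}
overlaps = find_overlaps(subnet_plan)
print(overlaps or "all networks are on separate subnets")
```

An empty result means no two networks share address space. Any pair that is returned should be re-planned before the nodes are configured.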

The following network names and IP addresses are required for this topology:

| | Node 1 | Node 2 | Node 3 | Node n |
|---|---|---|---|---|
| Data Protection Fully Qualified Domain Name* | | | | |
| Data Protection VLAN Address* | | | | |
| Data Protection Netmask* | | | | |
| Data Protection Gateway* | | | | |
| Data Protection DNS 1* | | | | |
| Data Protection DNS 2 | | | | |
| Data Protection DNS 3 | | | | |
| Storage Pool IP Address* | | | | |
| Storage Pool Netmask* | | | | |
| Management IP Address* | | | | |
| Management Netmask* | | | | |

\* Required fields

The following information is required for the VLANs. (The following section is provided with examples for illustrative purposes. Replace them with the appropriate IP addresses in your environment.)

| VLAN1 | | | | |
|---|---|---|---|---|
| VLAN1 Description | Infrastructure | | | |
| VLAN1 ID | 10 | | | |
| VLAN1 Address | 172.16.10.101 | 172.16.10.102 | 172.16.10.103 | |
| VLAN1 Netmask | 255.255.255.0 | | | |
| VLAN2 | | | | |
| VLAN2 Description | Marketing | | | |
| VLAN2 ID | 20 | | | |
| VLAN2 Address | 172.16.20.101 | 172.16.20.102 | 172.16.20.103 | |
| VLAN2 Netmask | 255.255.255.0 | | | |
| VLAN3 | | | | |
| VLAN3 Description | Sales | | | |
| VLAN3 ID | 30 | | | |
| VLAN3 Address | 172.16.30.101 | 172.16.30.102 | 172.16.30.103 | |
| VLAN3 Netmask | 255.255.255.0 | | | |

Note

If you have more than 3 nodes, expand the columns in this table to include all the nodes that you plan to set up.

Similarly, depending on the number of VLANs in your environment, add rows to include the information for all the VLANs.
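For larger clusters, the VLAN worksheet can be drafted programmatically. The sketch below reproduces the sample rows using Python's standard `ipaddress` module. The function name `vlan_address_plan` and the `first_host` parameter are hypothetical, and numbering nodes consecutively from host 101 is only the pattern of the sample addresses, not a product requirement.

```python
import ipaddress


def vlan_address_plan(vlans, node_count, first_host=101):
    """Build one worksheet entry per VLAN.

    `vlans` maps a description to (vlan_id, subnet in CIDR form).
    `first_host` is the host number given to Node 1; later nodes count up.
    """
    plan = {}
    for name, (vlan_id, cidr) in vlans.items():
        network = ipaddress.ip_network(cidr)
        addresses = []
        for i in range(node_count):
            address = network.network_address + first_host + i
            # Guard against running past the end of the subnet.
            if address not in network or address == network.broadcast_address:
                raise ValueError(f"{address} is not a usable host in {network}")
            addresses.append(str(address))
        plan[name] = {
            "id": vlan_id,
            "netmask": str(network.netmask),
            "addresses": addresses,
        }
    return plan


vlan_plan = vlan_address_plan(
    {
        "Infrastructure": (10, "172.16.10.0/24"),
        "Marketing": (20, "172.16.20.0/24"),
        "Sales": (30, "172.16.30.0/24"),
    },
    node_count=3,
)
for name, row in vlan_plan.items():
    print(name, row["id"], row["netmask"], *row["addresses"])
```

Raising `node_count` adds one address per extra node, which corresponds to adding columns to the table. Adding entries to the dictionary corresponds to adding VLAN rows.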

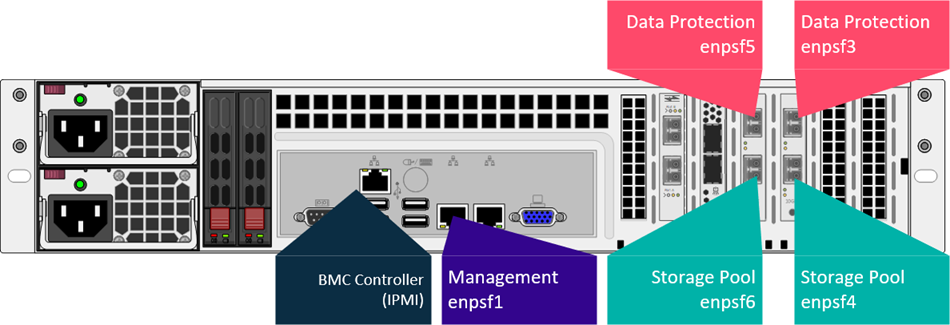

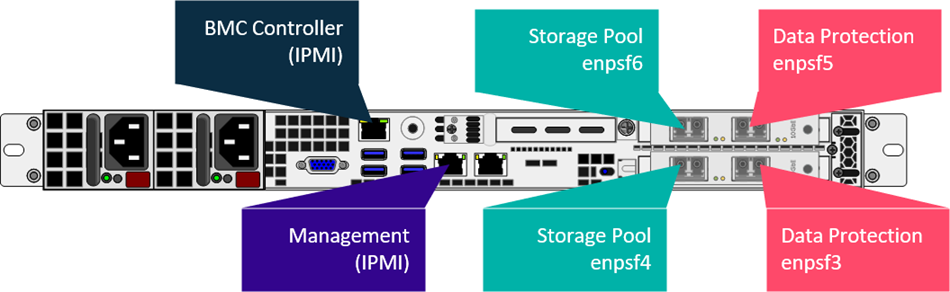

Cabling and Cable Connections

- Connect the 2 x 10 GbE data protection ports from each node to the data protection network.

  All data management tasks, including backups and restores, are established through the 2 x 10 GbE data protection ports.
- Connect the 2 x 10 GbE storage pool ports from each node to the private storage pool network.

  All storage-related tasks, including all cluster connectivity for the storage pool network, go through these 2 x 10 GbE storage pool ports.
- Connect the 1 GbE IPMI port (BMC controller) from each node to the IPMI, management, or utility network for lights-out access.
- Connect the 1 GbE management port to the management network.

  All management-related activities, such as connecting to the CommServe server and accessing the DNS server, are established through the management port.

HS4300 Connections

HS2300 Connections

Supported Network Cables

The network interface cards included with the HyperScale nodes support the use of either optical fiber or direct attach copper (Twinax) cabling.

Note

Each node has 2 dual-port 10 GbE adapters with an LC SFP+ transceiver installed in each port. These can be used for 10 GbE fiber cabling, or can be removed for copper Twinax cabling if desired. (RJ45-based 10 GbE NICs are available from the factory.)

The following cable connectors are supported:

| For Optical Fiber Connection | 10GBase-SR SFP+ modules are included for all 4 interfaces on each node to support standard or redundant cabling. You will need to provide 10GBase-SR SFP+ modules for your switch and compatible OM3 or OM4 multi-mode fiber cabling. |
|---|---|
| For Direct Attach Copper (Twinax) Connections | Direct Attach Copper cables are not included with an appliance purchase. When purchasing cables, ensure that they meet the following requirements: |
| 10GBASE-T Support (Copper Twisted Pair) | 10GBASE-T is not supported with the included SFP+ network interface cards. Optional 10GBASE-T network cards are available instead of SFP-based configurations at the time of order placement. Contact your Commvault representative for more information. |

Required Network Cables

You will need several network cables to set up the nodes. Make sure that these cables are available when you set up the nodes.

The following network cables are required per node:

- 2 x 1 GbE network cables for the iRMC and management ports
- 4 x 10 GbE network cables for the bonded data protection and storage pool ports

To estimate the total number of network cables required to set up all the nodes, multiply the number of nodes in your cluster by the per-node requirement.

For example, if you plan to set up 3 nodes in the cluster, you will need the following cables:

- 6 x 1 GbE network cables for the iRMC and management ports
- 12 x 10 GbE network cables for the bonded data protection and storage pool ports
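The same multiplication can be written as a short Python sketch for clusters of any size. The names `CABLES_PER_NODE` and `total_cables` are hypothetical. The per-node counts are the ones listed in this section.

```python
# Per-node cable counts taken from the list in this section.
CABLES_PER_NODE = {"1 GbE": 2, "10 GbE": 4}


def total_cables(node_count):
    """Multiply each per-node cable count by the number of nodes."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return {kind: count * node_count for kind, count in CABLES_PER_NODE.items()}


totals = total_cables(3)
print(totals)
```

For a 3-node cluster this gives 6 x 1 GbE and 12 x 10 GbE cables, matching the example above.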