Prepare the nodes in the Distributed Storage servers by installing the OS, assigning hostnames, and enabling jumbo frames (if supported).

Procedure

On each node in the Distributed Storage server:

-

Create a mirrored volume (RAID-1) on the two SSDs reserved for the OS. Leave all HDDs in pass-through (JBOD) mode.

-

Mount the downloaded ISO as virtual media and reboot the server.

-

Once the server boots from the mounted ISO, select the following options:

-

The RAID-1 volume as the installation destination for the OS.

-

Perform a fresh install (do not preserve old partitions or data).

Enter the root user password (hedvig by default) twice while the OS packages are installed. This step can take up to 30 minutes, after which the server must be rebooted.

-

-

After the OS is installed, set the hostname, stop the firewalld and NetworkManager services, and disable them so that the changes persist across reboots:

hostnamectl set-hostname <private_hostname> --static
systemctl stop firewalld
systemctl stop NetworkManager
chkconfig firewalld off
chkconfig NetworkManager off

-

Edit the /etc/sysconfig/selinux file and disable SELinux as follows:

SELINUX=disabled

-
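If you prefer to script the SELinux edit, the following is a minimal sketch. The function name `disable_selinux_config` is hypothetical, and the script assumes GNU sed. On RHEL/CentOS 7, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config. The `--follow-symlinks` option makes `sed -i` edit the link target instead of replacing the link with a regular file.

```shell
#!/bin/sh
# Hypothetical helper: set SELINUX=disabled in the SELinux config file.
# Assumes GNU sed; --follow-symlinks edits the symlink target in place
# instead of replacing /etc/sysconfig/selinux with a regular file.
disable_selinux_config() {
    cfg="${1:-/etc/sysconfig/selinux}"
    if grep -q '^SELINUX=' "$cfg"; then
        # Rewrite only the SELINUX= line; SELINUXTYPE= and comments are untouched.
        sed -i --follow-symlinks 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
    else
        # No SELINUX= line yet: make sure the file ends in a newline, then append.
        [ -n "$(tail -c 1 "$cfg")" ] && echo >> "$cfg"
        echo 'SELINUX=disabled' >> "$cfg"
    fi
}

# Usage (as root); the change takes effect at the next reboot:
#   disable_selinux_config
```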

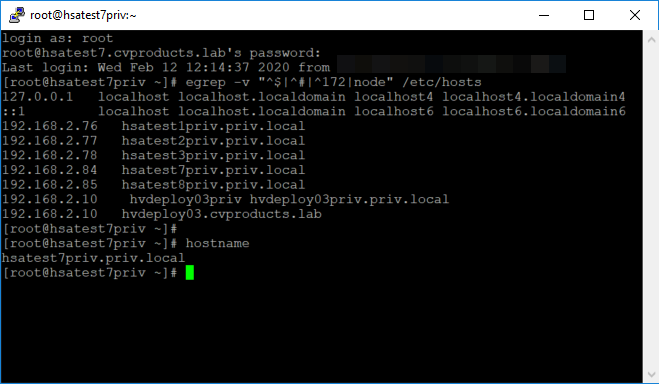

Edit /etc/hosts to add an entry for each storage server in the cluster. The configuration in the following example reflects 3 storage servers and 2 proxies with their respective private IPs and hostnames.

Note

Assign hostnames to the storage servers based on the private storage network. You can manage these through the /etc/hosts file on every storage server, including the Deployment server. The client-facing data-protection IP on each Distributed Storage proxy can be assigned through DNS.
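The following minimal sketch adds the same entries consistently on every server. The IP addresses, the hostnames, and the `add_host_entries` and `has_host` functions are hypothetical placeholders for a cluster of 3 storage servers and 2 proxies. Replace them with your own private-network values. The script skips any entry whose hostname is already present, so it can be re-run safely.

```shell
#!/bin/sh
# Hypothetical private-network entries: 3 storage servers and 2 proxies.
# Replace these placeholder addresses and hostnames with your own.
HOST_ENTRIES='192.168.10.11 storage1
192.168.10.12 storage2
192.168.10.13 storage3
192.168.10.21 proxy1
192.168.10.22 proxy2'

# Succeeds if hostname $1 already appears on a non-comment line of file $2.
has_host() {
    awk -v h="$1" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                   END { exit !found }' "$2"
}

# Append each entry whose hostname is not yet present (safe to re-run).
add_host_entries() {
    hosts_file="${1:-/etc/hosts}"
    # Ensure the file ends with a newline so appended entries start on their own line.
    [ -n "$(tail -c 1 "$hosts_file")" ] && echo >> "$hosts_file"
    printf '%s\n' "$HOST_ENTRIES" | while read -r ip name; do
        if [ -n "$name" ] && ! has_host "$name" "$hosts_file"; then
            printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}

# Usage (as root, on every storage server and the Deployment server):
#   add_host_entries
```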

-

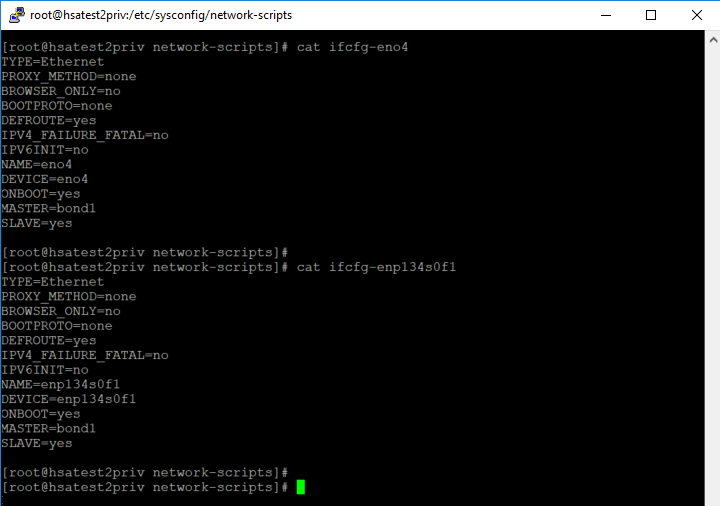

Navigate to the /etc/sysconfig/network-scripts directory and configure bond1 for private storage traffic as shown in the following example:
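The following minimal sketch writes a bond1 configuration as ifcfg files. Everything in it is an assumption for illustration:

- the function name `write_bond1_config`
- the member NIC names and the address
- the `active-backup` bonding mode, which needs no switch-side configuration

Use whatever mode your network design calls for; for example, `802.3ad` requires LACP on the switch ports. `NM_CONTROLLED=no` reflects that NetworkManager was disabled earlier. The function overwrites the member NICs' ifcfg files, so back them up first.

```shell
#!/bin/sh
# Hypothetical helper: write ifcfg files for bond1 on the private storage network.
# Arguments: target directory, IPv4 address, prefix length, member NIC 1, member NIC 2.
# Assumed bonding mode: active-backup (adjust BONDING_OPTS to your design).
write_bond1_config() {
    dir="$1"; ip="$2"; prefix="$3"; nic1="$4"; nic2="$5"

    # Bond master: carries the node's private storage IP.
    cat > "$dir/ifcfg-bond1" <<EOF
DEVICE=bond1
TYPE=Bond
BONDING_MASTER=yes
BONDING_OPTS="mode=active-backup miimon=100"
BOOTPROTO=none
ONBOOT=yes
NM_CONTROLLED=no
IPADDR=$ip
PREFIX=$prefix
EOF

    # Member NICs: no IP of their own, enslaved to bond1.
    for nic in "$nic1" "$nic2"; do
        cat > "$dir/ifcfg-$nic" <<EOF
DEVICE=$nic
TYPE=Ethernet
BOOTPROTO=none
ONBOOT=yes
NM_CONTROLLED=no
MASTER=bond1
SLAVE=yes
EOF
    done
}

# Usage (as root, hypothetical values):
#   write_bond1_config /etc/sysconfig/network-scripts 192.168.10.11 24 ens192 ens224
```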

-

Reboot the server for these changes to take effect.

-

If your network supports jumbo frames end-to-end, enabling them is recommended. Enable jumbo frames on the host and on ESXi as follows.

For details on enabling jumbo frames on ESXi, refer to the VMware documentation.

-

Log on to each storage cluster node or storage proxy as the root user.

ssh root@<storage cluster node or storage proxy>

When prompted, enter the root password (hedvig by default).

-

Add the following entry to the /etc/sysconfig/network-scripts/ifcfg-ens160 file:

MTU=9000

Important

Do not enter a different value for the MTU (maximum transmission unit).
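To make the same change non-interactively, you can use a sketch like the following. The function name `set_jumbo_mtu` is hypothetical. It replaces an existing `MTU=` line or appends one, so re-running it does not create duplicate entries. To target a different interface, pass that interface's ifcfg path as the argument.

```shell
#!/bin/sh
# Hypothetical helper: set MTU=9000 in an interface's ifcfg file.
# An existing MTU= line is replaced, so re-running never creates duplicates.
set_jumbo_mtu() {
    cfg="${1:-/etc/sysconfig/network-scripts/ifcfg-ens160}"
    if grep -q '^MTU=' "$cfg"; then
        sed -i 's/^MTU=.*/MTU=9000/' "$cfg"
    else
        # Ensure the file ends with a newline before appending.
        [ -n "$(tail -c 1 "$cfg")" ] && echo >> "$cfg"
        echo 'MTU=9000' >> "$cfg"
    fi
}

# Usage (as root), then restart the network:
#   set_jumbo_mtu
```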

-

Save the file and exit.

-

Restart the network.

systemctl restart network

-

Verify that jumbo frames work end-to-end by running the following command on one of the hosts:

ping -M do -s <MTU - 28> <remote host>

For example:

host1# ping -M do -s 8972 host2
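The `<MTU - 28>` payload accounts for the 20-byte IPv4 header and the 8-byte ICMP header, so a 9000-byte MTU gives 9000 - 28 = 8972 bytes. `-M do` sets the Don't Fragment bit, so a full-size packet can only get through if every hop accepts frames of that size. The following small sketch wraps this check. The function names are hypothetical, and the `-M` option assumes the Linux iputils `ping`.

```shell
#!/bin/sh
# ICMP payload that fills one frame of the given MTU (default 9000):
# MTU minus the 20-byte IPv4 header and the 8-byte ICMP header.
jumbo_payload_size() {
    echo $(( ${1:-9000} - 28 ))
}

# Hypothetical wrapper: send 3 pings with the DF bit set (-M do, Linux iputils)
# and a full-size payload to host $1 (optional MTU in $2).
# ping exits non-zero if no reply arrives.
check_jumbo_path() {
    ping -M do -c 3 -s "$(jumbo_payload_size "${2:-9000}")" "$1"
}

# Usage: check_jumbo_path host2
```

If a hop on the path uses a smaller MTU, expect an error such as "Message too long" or no replies at all.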

-

-

If you are using RHEL as the OS, register the system with Red Hat Subscription Management so that it can download software and updates:

subscription-manager register --username=<USER_NAME> --password=<PASSWORD> --auto-attach