Before you add your cluster to Commvault to begin protecting it, use kubectl commands to validate that your environment is ready for Commvault backups.

Get the API Server URL

To add your cluster to Commvault, you need to know the kube-apiserver or control plane URL.

Command to run:

kubectl cluster-info

In the following example output, the URL is https://k8s-123-4.home.arpa:6443:

Kubernetes control plane is running at https://k8s-123-4.home.arpa:6443
CoreDNS is running at https://k8s-123-4.home.arpa:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
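If you script this check, you can extract the URL from the kubectl cluster-info output. The following is a minimal POSIX shell sketch, not a Commvault-provided tool. The function name api_server_url is hypothetical, and the sketch assumes the output format shown above (older kubectl versions print "Kubernetes master is running at" instead). Because kubectl can color this output, the sketch strips ANSI color codes before parsing.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl cluster-info` output on stdin and print
# the control plane (kube-apiserver) URL.
api_server_url() {
  esc=$(printf '\033')
  # Strip ANSI color codes, then print the last field of the first line that
  # reports the control plane ("master" on older kubectl versions).
  sed "s/${esc}\[[0-9;]*m//g" |
    awk '/(control plane|master) is running at/ { print $NF; exit }'
}
```

Usage: kubectl cluster-info | api_server_url. As an alternative, kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}' prints the server URL of the current kubeconfig context without any text parsing.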

Verify the Nodes Are Ready

Verify that all cluster nodes (control plane nodes and worker nodes) report the Ready condition and do not report DiskPressure, MemoryPressure, PIDPressure, or NetworkUnavailable. For information about node conditions, see Conditions.

Also verify that Commvault supports the Kubernetes version that the nodes are running.

For on-premises clusters or infrastructure-based clusters, verify that multiple control plane (master) nodes are listed.

Command to run:

kubectl get nodes

Example output:

From an Azure Kubernetes Service (AKS) cluster:

NAME                                STATUS   ROLES   AGE     VERSION
aks-agentpool-26889666-vmss000000   Ready    agent   3h41m   v1.23.5
aks-agentpool-26889666-vmss000001   Ready    agent   3h41m   v1.23.5
aks-agentpool-26889666-vmss000002   Ready    agent   3h41m   v1.23.5

From a Google Kubernetes Engine (GKE) cluster:

NAME                                       STATUS   ROLES    AGE   VERSION
gke-cluster-1-default-pool-a709ed39-hcc6   Ready    <none>   9s    v1.23.6-gke.1700
gke-cluster-1-default-pool-a709ed39-q497   Ready    <none>   9s    v1.23.6-gke.1700
gke-cluster-1-default-pool-a709ed39-zkg8   Ready    <none>   9s    v1.23.6-gke.1700

From an on-premises Vanilla Kubernetes single-node dev/test cluster:

NAME        STATUS   ROLES                  AGE   VERSION
k8s-123-4   Ready    control-plane,master   77d   v1.23.4
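The node checks above can be scripted against the kubectl get nodes output. This is a minimal sketch with hypothetical function names. It assumes the default column order shown in the examples (NAME, STATUS, ROLES, AGE, VERSION). The STATUS column does not show pressure conditions, so use kubectl describe node node_name to review the Conditions section of any node you are unsure about. Managed services such as AKS and GKE do not list their control plane nodes, so the control plane count applies only to on-premises or infrastructure-based clusters.

```shell
#!/bin/sh
# Hypothetical helpers that read `kubectl get nodes` output on stdin.
# The header row, if present, is skipped.

# Print NAME and STATUS for every node whose STATUS is not exactly "Ready"
# (for example NotReady, Unknown, or Ready,SchedulingDisabled).
not_ready_nodes() {
  awk '$1 != "NAME" && $2 != "Ready" { print $1, $2 }'
}

# Print how many nodes list control-plane or master in the ROLES column.
control_plane_count() {
  awk '$1 != "NAME" && $3 ~ /(control-plane|master)/ { n++ } END { print n + 0 }'
}
```

Usage: kubectl get nodes | not_ready_nodes prints nothing when every node is Ready, and kubectl get nodes | control_plane_count prints the number of control plane nodes.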

Verify the CSI Drivers Are Functioning, Registered, and Support Persistent Mode

For the Container Storage Interface (CSI) drivers to perform provisioning, attach/detach, mount, and snapshot operations, the drivers must be installed, functioning, and registered, and they must support the Persistent volume lifecycle mode (listed in the MODES column of the command output).

-

Command to run:

kubectl get csidrivers -

Example output:

-

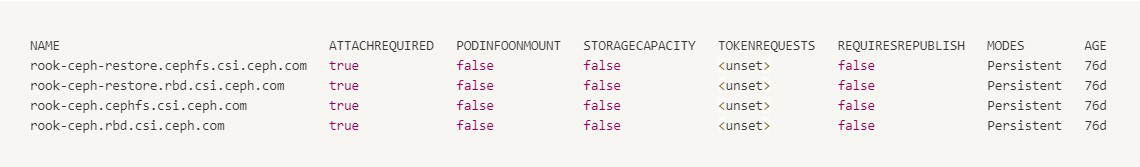

From a cluster that is running the Rook-Ceph CSI driver:

-

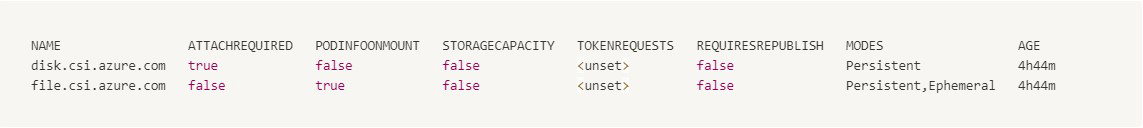

From an Azure Kubernetes Service (AKS) with default CSI drivers installed and configured:

-

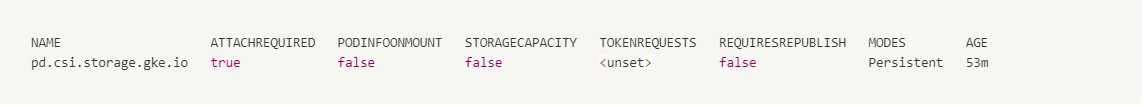

From a Google Kubernetes Engine (GKE) cluster with default CSI drivers installed and configured:

-

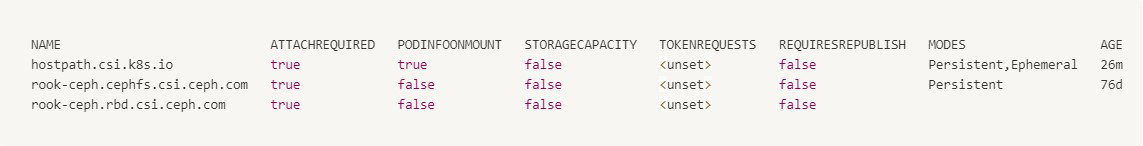

From a Vanilla Kubernetes cluster with Ceph and Hostpath CSI drivers installed and configured:

-
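To check the Persistent mode requirement across many drivers, you can filter the command output on the MODES column. This is a minimal sketch with a hypothetical function name. It finds the MODES column from the header row because the set of columns can differ between kubectl versions. It exits with status 2 if it cannot find a MODES column.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get csidrivers` output (with its header
# row) on stdin and print each driver whose MODES column does not include
# Persistent.
drivers_without_persistent_mode() {
  awk '
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == "MODES") col = i; next }
    col && ("," $col ",") !~ /,Persistent,/ { print $1 }
    END { if (!col) exit 2 }
  '
}
```

Usage: kubectl get csidrivers | drivers_without_persistent_mode. Do not pass --no-headers, because the sketch needs the header row.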

Verify the CSI Pods

Each of your CSI drivers has one or more pods that run to respond to provisioning, attach, detach, and mount requests. Verify that the pods for your CSI driver have a status of Running. Also verify that each CSI driver has 2 pods listed, with 1 pod labeled as the provisioner. Commvault uses the provisioner to create new CSI volumes during restores.
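One way to review the CSI pods is to filter the kubectl get pods -A output by pod name. This rough sketch uses a hypothetical function name. It assumes CSI driver pods have csi in their names, as csi-rbdplugin and csi-azuredisk-node do in the examples on this page. It also assumes provisioner pods have provisioner in their names. A name-based filter can also match unrelated pods, such as an application pod named csirbd-demo-pod, so review the results.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods -A` output on stdin, report CSI
# pods that are not Running, and summarize how many CSI and provisioner pods
# were found. Pods are matched by name only (a heuristic).
csi_pod_report() {
  awk '
    $1 == "NAMESPACE" { next }          # skip the header row
    tolower($2) ~ /csi/ {
      total++
      if (tolower($2) ~ /provisioner/) prov++
      if ($4 != "Running") print "not running:", $1 "/" $2, $4
    }
    END { printf "csi pods: %d, provisioner pods: %d\n", total, prov }
  '
}
```

Usage: kubectl get pods -A | csi_pod_report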

Verify Resource Limits

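Check whether your Kubernetes administrator has set ResourceQuotas on your cluster.

Command to run:

kubectl get resourcequotas -n namespace_name

Where namespace_name is the namespace that you want to check. To list the quotas in all namespaces, use kubectl get resourcequotas --all-namespaces.

If quotas are set, tune the CPU and memory settings of the Commvault worker pod so that the quotas do not prevent Commvault from creating the temporary worker pods and PersistentVolumeClaims that it needs.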
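To judge whether a quota leaves room for the temporary worker pod, compare the quota's remaining capacity with the worker pod's requests. The command kubectl describe resourcequota -n namespace_name lists the Used and Hard values for each resource. The following sketch uses hypothetical function names. It normalizes CPU and memory quantities and checks whether a request fits. It handles only millicore and plain CPU values, and only integer memory values with the Ki, Mi, or Gi suffix. The 5m CPU and 16Mi memory request values in the usage example come from the worker pod specification in the sample log at the end of this page, and might differ in your environment.

```shell
#!/bin/sh
# Hypothetical helpers for comparing Kubernetes resource quantities.

# Convert a CPU quantity such as 500m, 2, or 0.5 to millicores.
cpu_millis() {
  case "$1" in
    *m) echo "${1%m}" ;;
    *) awk -v q="$1" 'BEGIN { printf "%d\n", q * 1000 + 0.5 }' ;;
  esac
}

# Convert an integer memory quantity with a Ki, Mi, or Gi suffix (or no
# suffix, meaning bytes) to bytes. Decimal suffixes such as M or G are not
# handled in this sketch.
mem_bytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *) echo "$1" ;;
  esac
}

# Succeed if used + request does not exceed hard (all in the same unit).
fits_quota() {
  [ $(( $2 + $3 )) -le "$1" ]
}
```

Usage: fits_quota "$(cpu_millis 1)" "$(cpu_millis 900m)" "$(cpu_millis 5m)" && echo "CPU request fits". Take the hard and used values from the kubectl describe resourcequota output.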

Verify the Nodes Have No Active Taints

Verify that there are no taints on your cluster that might prevent backups or restores.

Command to run:

kubectl describe node node_name | grep -i taints

Where node_name is the name of the node that you want to verify.

Example output from a Vanilla Kubernetes node that has no active taints:

Taints: <none>
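To check every node at once, you can print the taint keys of all nodes with custom columns and keep only the nodes that have taints. This is a minimal sketch with a hypothetical function name. It assumes input from kubectl get nodes -o custom-columns='NAME:.metadata.name,TAINTS:.spec.taints[*].key', which prints <none> for a node without taints. Quote the custom-columns argument so that the shell does not expand [*].

```shell
#!/bin/sh
# Hypothetical helper: read the output of
#   kubectl get nodes -o custom-columns='NAME:.metadata.name,TAINTS:.spec.taints[*].key'
# on stdin and print every node that has at least one taint.
tainted_nodes() {
  awk 'NR > 1 && $2 != "<none>" { print $1 ": " $2 }'
}
```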

Verify the StorageClasses Are CSI-Enabled

The PersistentVolumeClaims (PVCs) that you want to protect must be provisioned by a registered, CSI-enabled StorageClass. Verify that the StorageClasses used by those PVCs have a Container Storage Interface (CSI) driver as their provisioner.

Command to run:

kubectl get storageclasses

Example output from a Vanilla Kubernetes cluster running Hostpath and Ceph RADOS Block Device (RBD) CSI drivers. This cluster also runs a non-CSI-based volume plug-in for provisioning object-based Rook-Ceph storage. If the provisioner of a StorageClass is not a CSI driver, Commvault does not support that volume plug-in or driver for backups and restores.
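One way to spot StorageClasses that are not CSI-based is to compare each StorageClass provisioner with the names of the registered CSI drivers. This is a minimal sketch with a hypothetical function name. It assumes the two input files come from the commands shown in its comments. It treats a provisioner as CSI-based only if a CSIDriver object with the same name exists.

```shell
#!/bin/sh
# Hypothetical helper. Inputs (one entry per line, no header row):
#   $1: CSI driver names, for example from
#       kubectl get csidrivers --no-headers -o custom-columns=NAME:.metadata.name
#   $2: "NAME PROVISIONER" pairs, for example from
#       kubectl get storageclasses --no-headers -o custom-columns=NAME:.metadata.name,PROVISIONER:.provisioner
# Prints each StorageClass whose provisioner is not a registered CSI driver.
non_csi_storageclasses() {
  awk 'FILENAME == ARGV[1] { csi[$1] = 1; next }
       !($2 in csi) { print $1, $2 }' "$1" "$2"
}
```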

Verify You Have a CSI Node That Can Handle Requests

After installing a CSI driver, you can verify that the installation was successful by listing the nodes that have CSI drivers installed on them.

Command to run:

kubectl get csinodes

Example output:

From an Azure Kubernetes Service (AKS) cluster that has a default CSI driver installation:

NAME                                DRIVERS   AGE
aks-agentpool-26889666-vmss000000   2         5h
aks-agentpool-26889666-vmss000001   2         5h
aks-agentpool-26889666-vmss000002   2         5h

From a Google Kubernetes Engine (GKE) cluster that has a default CSI driver installation:

NAME                                       DRIVERS   AGE
gke-cluster-1-default-pool-a709ed39-hcc6   1         73m
gke-cluster-1-default-pool-a709ed39-q497   1         73m
gke-cluster-1-default-pool-a709ed39-zkg8   1         73m
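Here is a quick scripted form of this check. The following minimal sketch, with a hypothetical function name, reads the kubectl get csinodes output and prints each node that reports zero CSI drivers. A node without a CSINode object does not appear in that output at all, so also compare the node names with the kubectl get nodes output.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get csinodes` output on stdin and print
# every node whose DRIVERS column is 0. The header row is skipped.
nodes_without_csi_drivers() {
  awk '$1 != "NAME" && $2 == 0 { print $1 }'
}
```

Usage: kubectl get csinodes | nodes_without_csi_drivers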

If Necessary, Get Detailed Information About the CSI Drivers Installed on Each Node

You can get detailed information about the CSI drivers installed on each node.

Command to run:

kubectl describe csinodes csinode_name

Example commands:

For an Azure Kubernetes Service (AKS) cluster:

kubectl describe csinodes aks-agentpool-26889666-vmss000000

Example output:

Name:               aks-agentpool-26889666-vmss000000
Labels:             <none>
Annotations:        storage.alpha.kubernetes.io/migrated-plugins: kubernetes.io/aws-ebs,kubernetes.io/azure-disk,kubernetes.io/azure-file,kubernetes.io/cinder,kubernetes.io/gce-pd
CreationTimestamp:  Mon, 30 May 2022 16:45:27 +0000
Spec:
  Drivers:
    disk.csi.azure.com:
      Node ID:  aks-agentpool-26889666-vmss000000
      Allocatables:
        Count:        4
      Topology Keys:  [topology.disk.csi.azure.com/zone]
    file.csi.azure.com:
      Node ID:  aks-agentpool-26889666-vmss000000
Events:             <none>

For a Google Kubernetes Engine (GKE) cluster:

kubectl describe csinodes gke-cluster-1-default-pool-a709ed39-hcc6

Example output:

Name:               gke-cluster-1-default-pool-a709ed39-hcc6
Labels:             <none>
Annotations:        storage.alpha.kubernetes.io/migrated-plugins: kubernetes.io/aws-ebs,kubernetes.io/azure-disk,kubernetes.io/cinder,kubernetes.io/gce-pd
CreationTimestamp:  Mon, 30 May 2022 20:36:18 +0000
Spec:
  Drivers:
    pd.csi.storage.gke.io:
      Node ID:  projects/intense-vault-351721/zones/us-central1-c/instances/gke-cluster-1-default-pool-a709ed39-hcc6
      Allocatables:
        Count:        15
      Topology Keys:  [topology.gke.io/zone]
Events:             <none>
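To check one driver across all nodes without describing each node, you can print each node's driver names with a JSONPath template. For example, kubectl get csinodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.drivers[*].name}{"\n"}{end}' prints one line per node: the node name, a tab, and the space-separated driver names. The following sketch, with a hypothetical function name, reads that output and prints the nodes on which a given driver is not registered.

```shell
#!/bin/sh
# Hypothetical helper. $1 is a CSI driver name such as disk.csi.azure.com.
# stdin: one line per node, "NODE<TAB>DRIVER1 DRIVER2 ...".
# Prints every node on which driver $1 is not registered.
nodes_missing_driver() {
  awk -F '\t' -v want="$1" '{
    found = 0
    n = split($2, drivers, " ")
    for (i = 1; i <= n; i++) if (drivers[i] == want) found = 1
    if (!found) print $1
  }'
}
```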

Verify the Pods Do Not Have an Error Status

Verify that the pods in your cluster, including the pods of its hosted applications, have a status of Running, Completed, or Terminated, and not Pending, Failed, CrashLoopBackOff, Evicted, or Unknown. Although Commvault notifies you when backups and restores fail, you still need to verify that your cluster is stable before you begin backups.

If you identify a pod that does not have a status of Running, Completed, or Terminated, see Troubleshooting Applications. For more information about states, see Container states.

Command to run:

kubectl get pods -A

Example output:

From a newly created Azure Kubernetes Service (AKS) cluster with all pods in the Running status (as expected):

NAMESPACE         NAME                                       READY   STATUS    RESTARTS        AGE
calico-system     calico-kube-controllers-7547b445f6-rgt7l   1/1     Running   1 (5h14m ago)   5h15m
calico-system     calico-node-6g6hm                          1/1     Running   0               5h15m
calico-system     calico-node-n5xvk                          1/1     Running   0               5h15m
calico-system     calico-node-r9kk9                          1/1     Running   0               5h15m
calico-system     calico-typha-ddbb67d7b-kzk6k               1/1     Running   0               5h15m
calico-system     calico-typha-ddbb67d7b-mxhhl               1/1     Running   0               5h15m
kube-system       cloud-node-manager-bdfsj                   1/1     Running   0               5h15m
kube-system       cloud-node-manager-bhkfp                   1/1     Running   0               5h15m
kube-system       cloud-node-manager-kqb5l                   1/1     Running   0               5h15m
kube-system       coredns-87688dc49-wb592                    1/1     Running   0               5h18m
kube-system       coredns-87688dc49-xbbpg                    1/1     Running   0               5h14m
kube-system       coredns-autoscaler-6fb889cdfc-vsm7j        1/1     Running   0               5h18m
kube-system       csi-azuredisk-node-6p4w8                   3/3     Running   0               5h15m
kube-system       csi-azuredisk-node-g5rct                   3/3     Running   0               5h15m
kube-system       csi-azuredisk-node-n2v72                   3/3     Running   0               5h15m
kube-system       csi-azurefile-node-6ss8s                   3/3     Running   0               5h15m
kube-system       csi-azurefile-node-r5682                   3/3     Running   0               5h15m
kube-system       csi-azurefile-node-rdrgn                   3/3     Running   0               5h15m
kube-system       kube-proxy-8f5fm                           1/1     Running   0               5h15m
kube-system       kube-proxy-sw4hs                           1/1     Running   0               5h15m
kube-system       kube-proxy-wqzlz                           1/1     Running   0               5h15m
kube-system       metrics-server-948cff58d-5d8qv             1/1     Running   1 (5h14m ago)   5h18m
kube-system       metrics-server-948cff58d-q4lsm             1/1     Running   1 (5h14m ago)   5h18m
kube-system       tunnelfront-5486fcf877-j8gvc               1/1     Running   0               4h56m
tigera-operator   tigera-operator-5755874764-vxckp           1/1     Running   0               5h18m

From a newly created Google Kubernetes Engine (GKE) cluster with all pods in the Running status (as expected):

NAMESPACE     NAME                                                  READY   STATUS    RESTARTS   AGE
kube-system   event-exporter-gke-5dc976447f-szrn9                   2/2     Running   0          86m
kube-system   fluentbit-gke-6896v                                   2/2     Running   0          85m
kube-system   fluentbit-gke-69ntr                                   2/2     Running   0          85m
kube-system   fluentbit-gke-wnwkd                                   2/2     Running   0          85m
kube-system   gke-metrics-agent-5cq66                               1/1     Running   0          85m
kube-system   gke-metrics-agent-cwnzn                               1/1     Running   0          85m
kube-system   gke-metrics-agent-tdvx2                               1/1     Running   0          85m
kube-system   konnectivity-agent-86cbc78d8-8sfzc                    1/1     Running   0          85m
kube-system   konnectivity-agent-86cbc78d8-gvvnn                    1/1     Running   0          85m
kube-system   konnectivity-agent-86cbc78d8-tbr2t                    1/1     Running   0          86m
kube-system   konnectivity-agent-autoscaler-84559799b7-njczq        1/1     Running   0          86m
kube-system   kube-dns-584f56f967-65758                             4/4     Running   0          86m
kube-system   kube-dns-584f56f967-jglv5                             4/4     Running   0          85m
kube-system   kube-dns-autoscaler-9f89698b6-rn54z                   1/1     Running   0          74m
kube-system   kube-proxy-gke-cluster-1-default-pool-a709ed39-hcc6   1/1     Running   0          84m
kube-system   kube-proxy-gke-cluster-1-default-pool-a709ed39-q497   1/1     Running   0          85m
kube-system   kube-proxy-gke-cluster-1-default-pool-a709ed39-zkg8   1/1     Running   0          85m
kube-system   l7-default-backend-5465dfc4ff-274zw                   1/1     Running   0          86m
kube-system   metrics-server-v0.5.2-6f6d597469-xn6sx                2/2     Running   0          85m
kube-system   pdcsi-node-2xr6p

From a Vanilla Kubernetes cluster that recently experienced a DiskFull event on its CSI driver:

NAMESPACE          NAME                                            READY   STATUS      RESTARTS      AGE
app-nginx          nginx                                           1/1     Running     0             76d
apps-helm          my-release-redis-master-0                       1/1     Running     0             56d
apps-helm          my-release-redis-replicas-0                     1/1     Running     0             56d
apps-helm          my-release-redis-replicas-1                     1/1     Running     0             56d
apps-helm          my-release-redis-replicas-2                     1/1     Running     0             56d
calico-apiserver   calico-apiserver-5444dfd6b4-nc9f6               1/1     Running     0             77d
calico-apiserver   calico-apiserver-5444dfd6b4-pkgwj               1/1     Running     0             77d
calico-system      calico-kube-controllers-67f85d7449-ctxdz        1/1     Running     1 (77d ago)   77d
calico-system      calico-node-tlqvh                               1/1     Running     1 (77d ago)   77d
calico-system      calico-typha-7bc4d5557f-rb7d2                   1/1     Running     2 (77d ago)   77d
default            csi-hostpath-socat-0                            1/1     Running     0             63m
default            csi-hostpathplugin-0                            8/8     Running     0             63m
default            csirbd-demo-pod                                 1/1     Running     0             76d
kube-system        coredns-64897985d-brsvc                         1/1     Running     1 (77d ago)   77d
kube-system        coredns-64897985d-s9rsq                         1/1     Running     1 (77d ago)   77d
kube-system        etcd-k8s-123-4                                  1/1     Running     1 (77d ago)   77d
kube-system        kube-apiserver-k8s-123-4                        1/1     Running     1 (77d ago)   77d
kube-system        kube-controller-manager-k8s-123-4               1/1     Running     1 (77d ago)   77d
kube-system        kube-proxy-8mmzm                                1/1     Running     1 (77d ago)   77d
kube-system        kube-scheduler-k8s-123-4                        1/1     Running     1 (77d ago)   77d
kube-system        snapshot-controller-7f5d798964-6vz5m            1/1     Running     0             76d
kube-system        snapshot-controller-7f5d798964-mc59t            1/1     Running     0             76d
rook-ceph          csi-cephfsplugin-provisioner-5dc9cbcc87-9hjvh   6/6     Running     0             76d
rook-ceph          csi-cephfsplugin-qjpbn                          3/3     Running     0             76d
rook-ceph          csi-rbdplugin-provisioner-58f584754c-gqw6b      6/6     Running     1 (43d ago)   76d
rook-ceph          csi-rbdplugin-x5vlg                             3/3     Running     0             76d
rook-ceph          rook-ceph-mgr-a-f74657b66-bs6b2                 0/1     Completed   1             76d
rook-ceph          rook-ceph-mgr-a-f74657b66-k8vz2                 1/1     Running     0             11d
rook-ceph          rook-ceph-mon-a-75d9f6df4-446xc                 0/1     Evicted     0             2d15h
rook-ceph          rook-ceph-mon-a-75d9f6df4-7j7pd                 0/1     Completed   0             25d
rook-ceph          rook-ceph-mon-a-75d9f6df4-bsbwg                 0/1     Evicted     0             2d15h
rook-ceph          rook-ceph-mon-a-75d9f6df4-c7rxf                 0/1     Evicted     0             2d15h
rook-ceph          rook-ceph-mon-a-75d9f6df4-fktm4                 0/1     Completed   0             42d
rook-ceph          rook-ceph-mon-a-75d9f6df4-fplth                 0/1     Evicted     0             2d15h
rook-ceph          rook-ceph-mon-a-75d9f6df4-kctct                 0/1     Completed   1             76d
rook-ceph          rook-ceph-mon-a-75d9f6df4-msx5n                 1/1     Running     0             2d15h
rook-ceph          rook-ceph-mon-a-75d9f6df4-phmrb                 0/1     Evicted     0             2d15h
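On a large cluster, filtering for the statuses that need investigation is quicker than reading the whole list. This is a minimal sketch with a hypothetical function name. It reads the kubectl get pods -A output and prints every pod whose STATUS column is not Running, Completed, or Terminated. It relies on the default column order (NAMESPACE, NAME, READY, STATUS, ...). A pod reported as Running can still have containers that are not ready, so also check the READY column.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# NAMESPACE, NAME, and STATUS for pods that are not Running, Completed, or
# Terminated (for example Pending, Evicted, or CrashLoopBackOff).
pods_needing_attention() {
  awk '$1 != "NAMESPACE" && $4 != "Running" && $4 != "Completed" && $4 != "Terminated" {
    print $1, $2, $4
  }'
}
```

Usage: kubectl get pods -A | pods_needing_attention. On the Vanilla cluster above, this would list the Evicted rook-ceph-mon pods.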

Verify You Have a VolumeSnapshotClass That Has a CSI Driver

A CSI-enabled VolumeSnapshotClass is required for Commvault to orchestrate the creation of storage snapshots. Verify that your environment includes a VolumeSnapshotClass that uses a CSI driver. A VolumeSnapshot is a storage-level snapshot taken by the underlying storage subsystem. Volume snapshots can be application-consistent or infrastructure/storage-consistent (the default).

Command to run:

kubectl get volumesnapshotclass

Example output from a Vanilla Kubernetes cluster with Hostpath and Ceph RADOS Block Device (RBD) VolumeSnapshotClasses installed and configured:

NAME                      DRIVER                       DELETIONPOLICY   AGE
csi-hostpath-snapclass    hostpath.csi.k8s.io          Delete           74m
csi-rbdplugin-snapclass   rook-ceph.rbd.csi.ceph.com   Delete           76d
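A VolumeSnapshotClass is intended for volumes whose CSI driver matches its DRIVER value. It is therefore worth confirming that the CSI driver behind each StorageClass you protect has a matching class. This is a minimal sketch with a hypothetical function name. It reads the kubectl get volumesnapshotclass output and prints the classes for a given driver. It exits with status 1 if there are none.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get volumesnapshotclass` output on stdin
# and print the names of the classes whose DRIVER equals $1.
# Exits with status 1 if no class uses that driver.
snapclasses_for_driver() {
  awk -v want="$1" '
    $1 != "NAME" && $2 == want { print $1; found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage: kubectl get volumesnapshotclass | snapclasses_for_driver rook-ceph.rbd.csi.ceph.com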

If Necessary, Get Detailed Information About the VolumeSnapshotClass

Command to run:

kubectl describe volumesnapshotclass volumesnapshotclass_name

Example command:

kubectl describe volumesnapshotclass csi-rbdplugin-snapclass

Example output:

Name:             csi-rbdplugin-snapclass
Namespace:
Labels:           <none>
Annotations:      <none>
API Version:      snapshot.storage.k8s.io/v1
Deletion Policy:  Delete
Driver:           rook-ceph.rbd.csi.ceph.com
Kind:             VolumeSnapshotClass
Metadata:
  Creation Timestamp:  2022-03-14T23:05:36Z
  Generation:          1
  Managed Fields:
    API Version:  snapshot.storage.k8s.io/v1
    Fields Type:  FieldsV1
    fieldsV1:
      f:deletionPolicy:
      f:driver:
      f:parameters:
        .:
        f:clusterID:
        f:csi.storage.k8s.io/snapshotter-secret-name:
        f:csi.storage.k8s.io/snapshotter-secret-namespace:
    Manager:         kubectl-create
    Operation:       Update
    Time:            2022-03-14T23:05:36Z
  Resource Version:  67371
  UID:               153a1fac-783c-4b71-9d57-f0e161650100
Parameters:
  Cluster ID:                                       rook-ceph
  csi.storage.k8s.io/snapshotter-secret-name:       rook-csi-rbd-provisioner
  csi.storage.k8s.io/snapshotter-secret-namespace:  rook-ceph
Events:                                             <none>

The example output shows that the underlying driver is a CSI driver (rook-ceph.rbd.csi.ceph.com) and that a VolumeSnapshotClass is registered.

Volume snapshot support is not installed by default in many cloud-managed Kubernetes services. For example, on default installations of Azure Kubernetes Service (AKS) and Google Kubernetes Engine (GKE) clusters, the command returns "No resources found", which indicates that no VolumeSnapshotClass is registered. Snapshot backups are not possible until you install the volume snapshot controller and the associated VolumeSnapshotClass and Custom Resource Definitions. For instructions to install the CSI external-snapshotter, see kubernetes-csi / external-snapshotter.

Verify the API Version Includes snapshot.storage.k8s.io

The CSI external-snapshotter supports both v1 and v1beta1 snapshot APIs. Verify that your installed external-snapshotter supports the snapshot.storage.k8s.io/v1 API.

Command to run:

kubectl describe volumesnapshotclass volumesnapshotclass_name

In the output, verify that the API Version field is snapshot.storage.k8s.io/v1. For example, the output for csi-rbdplugin-snapclass in the previous section includes the following line:

API Version:      snapshot.storage.k8s.io/v1
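As a cross-check that does not depend on a particular VolumeSnapshotClass, kubectl api-versions lists every API group version that the API server serves. The following minimal sketch, with a hypothetical function name, succeeds only if snapshot.storage.k8s.io/v1 is among them.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl api-versions` output on stdin and succeed
# only if the snapshot.storage.k8s.io/v1 API is served.
has_snapshot_v1() {
  grep -Fqx 'snapshot.storage.k8s.io/v1'
}
```

Usage: kubectl api-versions | has_snapshot_v1 && echo "snapshot.storage.k8s.io/v1 is available"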

Verify You Have a snapshot-controller Pod in the Running Status

Installing the CSI external-snapshotter installs the Kubernetes Volume Snapshot CRDs, the volume snapshot controller, and the snapshot validation webhook. Verify that the snapshot-controller is installed and has a status of Running, which the external snapshotter requires to handle snapshot orchestration requests.

Command to run:

kubectl get pods -A | grep -i snapshot

Example output from a Vanilla Kubernetes cluster with the external-snapshotter installed and running:

kube-system        snapshot-controller-7f5d798964-6vz5m            1/1     Running     0             76d
kube-system        snapshot-controller-7f5d798964-mc59t            1/1     Running     0             76d
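For a pass/fail version of this check, the following minimal sketch, with a hypothetical function name, counts the Running snapshot-controller pods in the kubectl get pods -A output. It assumes the controller pods are named snapshot-controller-..., as in the example above. The name can differ depending on how the external-snapshotter was deployed.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# the number of snapshot-controller pods whose STATUS is Running. Exits with
# status 1 if there are none.
running_snapshot_controllers() {
  awk '$2 ~ /^snapshot-controller-/ && $4 == "Running" { n++ }
       END { print n + 0; exit (n > 0 ? 0 : 1) }'
}
```

Usage: kubectl get pods -A | running_snapshot_controllers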

Verify There Are No Orphan Resources Created by Commvault

Note

If a backup or restore operation is interrupted, Commvault might not have a chance to remove temporary VolumeSnapshots, volumes, and Commvault worker pods. Commvault attaches labels to these resources so that you can easily identify them and, if required, remove them manually. For more information, see Restrictions and Known Issues for Kubernetes.

Command to run:

kubectl get pods,pvc,volumesnapshot -l cv-backup-admin= --all-namespaces

Example output from a cluster that has no orphaned objects:

No resources found

If Necessary, Delete Orphaned Resources

If orphaned resources are listed, delete them.

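Command to run:

kubectl delete resource_type resource_name -n namespace_name

Where resource_type is pod, pvc, or volumesnapshot, resource_name is the name of the orphaned resource, and namespace_name is its namespace.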
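When several orphaned resources are listed, you might prefer to generate the delete commands and review them before running anything. This is a minimal sketch with a hypothetical function name. It expects NAMESPACE KIND NAME lines, for example from kubectl get pods,pvc,volumesnapshot -l cv-backup-admin= --all-namespaces --no-headers -o custom-columns=NAMESPACE:.metadata.namespace,KIND:.kind,NAME:.metadata.name. It only prints kubectl delete commands and does not run them.

```shell
#!/bin/sh
# Hypothetical helper: read "NAMESPACE KIND NAME" lines (no header row) on
# stdin and print, without running them, the kubectl delete commands that
# would remove those resources. The kind is lowercased for kubectl.
orphan_delete_commands() {
  awk 'NF == 3 { printf "kubectl delete %s %s -n %s\n", tolower($2), $3, $1 }'
}
```

After you confirm that every printed resource is an orphaned Commvault resource, you can run the commands, for example by piping the output to sh.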

Verify the Commvault Worker Pod Container Image

You can validate the container image that Commvault uses to perform backups and restores by viewing the log files:

- For backups: vsbkp.log
- For restores: vsrst.log

For example, the following log entry for a failed backup shows where the container image is identified:

CK8sInfo::MountVM() - Backup failed for app [nginx-pod-3]. Error [1002:0xEDDD03EA:{CK8sInfo::Backup(395)} + {K8sApp::CreateWorker(1662)/Int.1002.0x3EA-Error creating worker pod. [0xFFFFFFFF:{K8sUtils::CreatePod(108)} + {K8sUtils::CreateNamespaced(510)/ErrNo.-1.(Unknown error -1)-Create Api failed for namespace quota config {"apiVersion":"v1","kind":"Pod","metadata":{"labels":{"cv-backup-admin":""},"name":"nginx-pvc-nginx-pod-3-cv-120813","namespace":"quota"},"spec":{"containers":[{"command":["/bin/sh","-c","tail -f /dev/null"],"image":"internal-registry.yourdomain.com:5000/e2e-test/library/ <image_name>","name":"cvcontainer","resources":{"limits":{"cpu":"500m","memory":"128Mi"},"requests":{"cpu":"5m","memory":"16Mi"}},"volumeMounts":[{"mountPath":"/mnt/nginx-pvc-nginx-pod-3-cv-120813","name":"data"}]}],"imagePullSecrets":[{"name":"abc"}],"serviceAccountName":"default","volumes":[{"name":"data","persistentVolumeClaim":{"claimName":"nginx-pvc-nginx-pod-3-cv-120813"}}]}}}]}]
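To pull the image reference out of a log entry like the one above, a sed expression that captures the value of the "image" field is enough. This is a minimal sketch with a hypothetical function name. It assumes the worker pod specification is logged as JSON on a single line, as in the example. It takes the log file path as an argument because the log location depends on your installation.

```shell
#!/bin/sh
# Hypothetical helper: print each distinct container image found in
# "image":"..." fields of the given Commvault log file (for example vsbkp.log).
# With the greedy leading .*, sed captures the last "image" field on a line,
# which is enough for the single-container worker pod shown in the example.
worker_images() {
  sed -n 's/.*"image":"\([^"]*\)".*/\1/p' "$1" | sort -u
}
```

Usage: worker_images vsbkp.log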

By default, container image information is included in the logs only when a job fails. To always include the image information, increase the debug level to 2 or higher in the CommCell Console. For information, see Configuring Log File Settings in the CommCell Console.

For more information about the container image that is used, see System Requirements for Kubernetes.