Commvault HyperScale X provides flexible topologies for connecting the nodes to different network infrastructures. The switch configuration that must be in place depends on the selected network topology.

The following general rules must be followed for configuring switch ports for HyperScale X:

- All interfaces configured for the HyperScale X nodes should be configured as access/untagged ports, except when connecting the nodes to multiple VLANs.

- When using Active-Backup bonding, no port-channel or link aggregation needs to be configured on the switch.

- When using Link Aggregation Control Protocol (LACP), each pair of ports should be configured as an active port-channel and should not be configured to negotiate the aggregation protocol.

- Storage pool interfaces should be dedicated to inter-node communication and should not be configured as VLAN trunks carrying multiple VLANs.

- Storage pool networks and data protection networks must be on separate physical interfaces.
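The rules above lend themselves to a quick sanity check of a planned port layout before it is applied to the switches. The following is a minimal sketch only: the `NodePort` model, its field names, and the `check_ports` helper are hypothetical and are not part of any Commvault or switch vendor tooling.

```python
from dataclasses import dataclass, field


@dataclass
class NodePort:
    """One switch port facing a HyperScale X node (hypothetical model)."""
    node: str
    nic: str          # physical interface name on the node (illustrative)
    network: str      # "storage_pool" or "data_protection"
    vlans: list = field(default_factory=list)  # VLAN IDs carried on the port
    tagged: bool = False                       # True if the port is a VLAN trunk


def check_ports(ports):
    """Return a list of rule violations; an empty list means none were found."""
    problems = []
    nic_networks = {}
    for p in ports:
        # Storage pool ports should not be trunks carrying multiple VLANs.
        if p.network == "storage_pool" and p.tagged and len(p.vlans) > 1:
            problems.append(f"{p.node}/{p.nic}: storage pool port trunks multiple VLANs")
        # Ports should be access/untagged unless multiple VLANs are needed.
        if p.tagged and len(p.vlans) <= 1:
            problems.append(f"{p.node}/{p.nic}: single VLAN on a tagged port; use access mode")
        nic_networks.setdefault((p.node, p.nic), set()).add(p.network)
    # Storage pool and data protection must use separate physical interfaces.
    for (node, nic), networks in nic_networks.items():
        if {"storage_pool", "data_protection"} <= networks:
            problems.append(f"{node}/{nic}: shared by storage pool and data protection")
    return problems


# Hypothetical layout for one node: two untagged ports on separate interfaces.
layout = [
    NodePort("node1", "nic-a", "data_protection", [100]),
    NodePort("node1", "nic-b", "storage_pool", [200]),
]
print(check_ports(layout))
```

The check covers only the VLAN and interface-separation rules; bonding mode and port-channel settings live on the switch and are not modeled here.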

Frequently Asked Questions

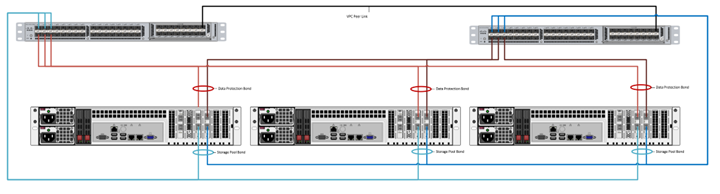

Can HyperScale X be dual-homed to multiple switches using Cisco Virtual Port Channel (VPC)?

Yes, HyperScale X supports using LACP over Cisco VPC.

HyperScale X fully supports multi-switch link aggregation implementations from most vendors including Cisco VPC, Arrista mLAG, and Mellanox mLAG.

See the following image for an example of the cabling configuration using Cisco VPC:

Note

When configuring the network for Commvault HyperScale X nodes with multi-switch link aggregation implementations, use the configuration steps described in LACP Bonding.

When deploying a large number of nodes, can they be connected to different switches to improve availability in the event of a switch or rack failure?

A single HyperScale X storage pool can be deployed across multiple switches/racks/availability zones as long as the following conditions are met:

- All the nodes reside in the same physical building.

- Data protection interfaces in all the nodes reside in the same layer 2 subnet (VLAN).

- Storage pool interfaces in all the nodes reside in the same layer 2 subnet (VLAN).

- Inter-node communication does not traverse a firewall or WAN link.

HyperScale X leverages a distributed file system, and every read and write must communicate with multiple nodes within the pool to be serviced. High latency introduced by firewalls, routers, or WAN links severely impacts the performance and stability of the cluster, so latency must be factored in when planning the HyperScale X deployment. Also pay careful attention to the available bandwidth between the nodes and between zones containing HyperScale X nodes. Even the smallest N4 HyperScale X nodes can fully saturate a 10GbE storage pool interface under load or when performing recovery operations. Ensure that inter-switch, inter-rack, and inter-zone links have enough bandwidth to service all the nodes within each zone.
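As a rough planning aid, the bandwidth concern above can be expressed as an oversubscription ratio for each inter-zone link. The sketch below deliberately assumes the pessimistic case in which every node in a zone saturates its storage pool interface at the same time and all of that traffic crosses the uplink. The function name and the example figures are illustrative and are not Commvault sizing guidance.

```python
def inter_zone_oversubscription(nodes_in_zone, node_link_gbps, uplink_gbps):
    """Ratio of worst-case node traffic to inter-zone uplink capacity.

    A value above 1.0 means the nodes in the zone could, in the worst
    case, offer more traffic than the uplink can carry.
    """
    if uplink_gbps <= 0:
        raise ValueError("uplink_gbps must be positive")
    worst_case_gbps = nodes_in_zone * node_link_gbps
    return worst_case_gbps / uplink_gbps


# Example: 6 nodes with 10GbE storage pool interfaces behind a 40 Gbps uplink.
ratio = inter_zone_oversubscription(nodes_in_zone=6, node_link_gbps=10, uplink_gbps=40)
print(f"oversubscription ratio: {ratio:.2f}")  # oversubscription ratio: 1.50
```

A ratio above 1.0 does not by itself mean the design fails, since not all traffic leaves the zone, but it marks a link to review with the network administrator.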

When using bonding (active-backup or LACP), are all ports for the nodes configured into one set of port-channels, or does each node get its own port-channel?

When using bonding, each node should have its own pair of port-channels. For example, if you have 3 nodes, you must create a total of 6 port-channels as follows:

- 3 port-channels for the data protection network (one per node)

- 3 port-channels for the storage pool network (one per node)
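The count generalizes to two port-channels per node, one for each network. The sketch below enumerates such a plan for any list of nodes. The function name, the node names, and the port-channel ID numbering are assumptions made for illustration; use whatever numbering scheme your switches require. It applies where port-channels are configured on the switch at all, which, per the general rules, is not needed for Active-Backup bonding.

```python
def plan_port_channels(nodes, networks=("data_protection", "storage_pool"), first_id=11):
    """Assign one port-channel per (network, node) pair.

    The ID numbering is purely illustrative.
    """
    plan = []
    next_id = first_id
    for network in networks:
        for node in nodes:
            plan.append({"port_channel": next_id, "network": network, "node": node})
            next_id += 1
    return plan


plan = plan_port_channels(["node1", "node2", "node3"])
for entry in plan:
    print(entry["port_channel"], entry["network"], entry["node"])
print(len(plan), "port-channels in total")  # 6 port-channels in total
```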

HyperScale X nodes need to be connected to multiple independent networks, and require more than four (4) network interfaces per node. Can additional NICs be added to the nodes to support this configuration?

Yes, adding physical interfaces to HyperScale X nodes is supported as long as the following conditions are met:

- Sufficient free slots exist on the hardware to support the additional NICs.

- All nodes are configured to access all the configured networks.

- Additional NICs for Commvault HyperScale X must be purchased through Commvault. Please contact your Commvault representative for more information. Each appliance model supports up to 1 additional interface card.

Does Commvault HyperScale X support jumbo frames?

Jumbo frames are supported on both the data protection and storage pool networks.

However, to use jumbo frames, they must be enabled everywhere, which includes the sending device, the receiving device, and every network switch, router, and firewall in between.

For this reason, jumbo frames are recommended only on the storage pool network, where they are relatively simple to implement. Because the nodes only talk to each other on a private network, jumbo frames have to be enabled only on the HyperScale X nodes and on the storage pool connections, such as the switches they are connected to.

On the data protection network, if there are 5000 clients, for example, all 5000 clients must have jumbo frames enabled, along with network devices such as switches and routers, some of which may not support jumbo frames.
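The "enabled everywhere" requirement follows from the fact that the usable MTU of a path is the smallest MTU of any device on it. The sketch below illustrates this with a hypothetical list of per-hop MTU values and also derives the largest ICMP echo payload that fits in one unfragmented IPv4 packet (the MTU minus the 20-byte IPv4 header and the 8-byte ICMP header). The function names and the example path are illustrative.

```python
IPV4_HEADER_BYTES = 20
ICMP_HEADER_BYTES = 8


def effective_path_mtu(hop_mtus):
    """The usable MTU of a path is the smallest MTU of any hop on it."""
    if not hop_mtus:
        raise ValueError("hop_mtus must not be empty")
    return min(hop_mtus)


def max_ping_payload(mtu):
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


# Hypothetical path: node NIC -> switch -> router -> client NIC.
# One device left at the default 1500 limits the whole path.
path = [9000, 9216, 1500, 9000]
mtu = effective_path_mtu(path)
print(mtu, max_ping_payload(mtu))  # 1500 1472
print(max_ping_payload(9000))      # 8972
```

The payload figure is the size to use when testing a path with a non-fragmenting ping, for example `ping -M do -s 8972 <peer>` on Linux for a 9000-byte MTU.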

For additional information on configuring jumbo frames, see the guidelines provided in How to enable jumbo frames for network interfaces in Red Hat Enterprise Linux on the Red Hat Customer Portal.

Organizations should work with their network administrator to determine whether jumbo frames are supported in their environment. In the event of failure or poor network performance, Commvault support may request that jumbo frames be disabled, or that the maximum transmission unit (MTU) size be changed, as part of troubleshooting.